Hey guys!

In this post I bring you a simple solution, but one that I really liked, because it helped me to greatly reduce the processing time of an Azure Data Factory (ADF) pipeline by changing the Service Objective and resizing an Azure SQL Database using T commands -SQL before the start of processing, and returning to the original tier at the end of all processing.

To change the Azure SQL Database tier, you can use the interface of the Azure portal, Powershell, Azure CLI, Azure DevOps and a series of other alternatives, in addition to our dear Transact-SQL (T-SQL), which, in my view , is the easiest and most practical choice of all.

See how easy it is:

|

1 2 |

ALTER DATABASE [dirceuresende] MODIFY(SERVICE_OBJECTIVE = 'S3') |

The problem with all these solutions, especially in the scenario I'm talking about, is that changing the Service Objective, aka Service Tier, is not done immediately, that is, you run the command and Azure SQL will change the tier in the time that Azure sees fit. It can take 1 second, 10 seconds, 60 seconds, etc… And when the Azure SQL Database tier changes, the sessions are disconnected.

In the scenario I listed above, where I change the tier before starting to process the data, if I simply run the T-SQL command and continue processing, in the middle of it Azure will change the tier, the connection will drop for a few seconds and the bank will be unavailable for a few seconds.

You can also use Retry in all components of your pipeline and thus avoid this being a problem for you, because when the connection drops, the retry will be activated and the operations will be done again, but you would have to configure the retry in all components instead of just configuring the upsizing component, you may have been charged for data movement and resource usage of the ADF itself during this time that it processed for nothing. In addition, side effects can happen if a component is not prepared to be interrupted in the middle of execution and executed again.

Another possible solution is to place a Wait operator in Azure Data Factory and specify any amount of time that you think is sufficient for Azure to change the tier. In my tests, the time required for Azure SQL Database to perform the tier switch is usually somewhere between 50 and 90 seconds.

While this may work in some cases (and not in others), this solution doesn't seem to me to be very reliable. If the time to change the tier exceeds the limit I set, I will have waited a long time and it will still fail in the middle of processing. And if the change ends sooner, I will have waited a long time unnecessarily.

I looked for some solutions to solve my problem and ended up falling for the idea of Data Platform MVP Greg Low in this post here, but I chose to create my procedure in order to have a simpler solution to try to solve this problem.

Stored Procedure source code

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

CREATE PROCEDURE dbo.stpAltera_Tier_DB ( @ServiceLevelObjective VARCHAR(50), @TimeoutEmSegundos INT = 60 ) AS BEGIN SET NOCOUNT ON DECLARE @Query NVARCHAR(MAX), @DataHoraLimite DATETIME2 = DATEADD(SECOND, @TimeoutEmSegundos, GETDATE()), @ServiceLevelObjectiveAtual VARCHAR(20) = CONVERT(VARCHAR(100), DATABASEPROPERTYEX( DB_NAME(), 'ServiceObjective' )) IF (@ServiceLevelObjectiveAtual <> @ServiceLevelObjective) BEGIN SET @Query = N'ALTER DATABASE [' + DB_NAME() + '] MODIFY (SERVICE_OBJECTIVE = ''' + @ServiceLevelObjective + ''');' EXEC sp_executesql @Query; WHILE ((DATABASEPROPERTYEX( DB_NAME(), 'ServiceObjective' ) <> @ServiceLevelObjective) AND GETDATE() <= @DataHoraLimite) BEGIN WAITFOR DELAY '00:00:00.500'; END END END |

The use of this procedure is very simple:

|

1 2 3 |

EXEC dbo.stpAltera_Tier_DB @ServiceLevelObjective = 'S3', @TimeoutEmSegundos = 60 |

After executing the command, the procedure will wait until the change is made effective by Azure, reevaluating every 500 milliseconds if the change has already been made, respecting the defined time limit. If the limit is reached, the procedure will end execution even if the change is not yet effective. If the change is made less than the time limit, the procedure will finish executing as soon as the tier is changed, avoiding wasting time.

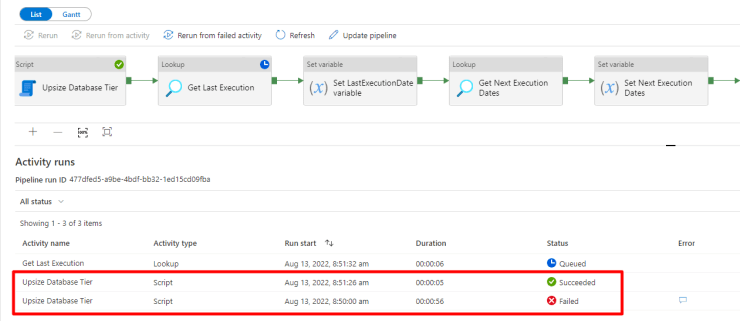

To execute this procedure through Azure Data Factory, we will use the “new” Script component, made available on March 6, 2022:

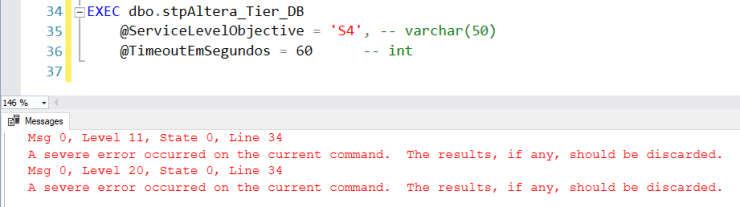

As Azure SQL Database eliminates all connections and the database is unavailable for a few seconds, even with this treatment, the procedure will return an error because its own session was eliminated:

In Azure Data Factory, it will also give an error when executing this procedure when the exchange is effective:

For this solution to work, let's define a Retry for this Script block, so that when it fails (and it will fail), it enters the Retry, waits another 10 seconds and executes the Procedure again.

In the second execution of the Stored Procedure, as the change will be made by the same tier of the previous execution, the command will be executed instantly and Azure will just ignore the command and return success in the execution, as shown below:

And with that, now your bank already has the new tier and you can start processing the data. At the end of processing, I do the downscale to return the tier to the original value, but this time, I don't have to wait for the change to finish, because I won't process anything else.

So I can use the most basic way to go back to the previous tier:

To be quite honest with you, thinking about simplicity first, not even the Stored Procedure is needed after all. As the connection is always interrupted, I can just put a simple IF with a very long WAITFOR DELAY inside the Script block and have the same behavior:

|

1 2 3 4 |

ALTER DATABASE [dirceuresende] MODIFY(SERVICE_OBJECTIVE = 'S6') IF (CONVERT(VARCHAR(100), DATABASEPROPERTYEX( DB_NAME(), 'ServiceObjective' )) <> 'S6') WAITFOR DELAY '00:10:00'; |

And just like using the Stored Procedure, the routine gave an error in the first execution, waiting until the tier change is carried out in the database. When this occurs, the connection is interrupted and the execution returns failure.

Azure Data Factory waits 10 seconds (time I set it) after the failure and tries again. This time, the execution is very fast, since the tier has already been changed to the chosen tier. This second run returns success and the pipeline cycle continues normally.

The behavior ended up being the same as the Procedure, but much simpler. I put a very long wait (10 minutes), which will end up being the time limit that Azure will have to make the change, which is more than enough. Finishing the change before, the cycle continues without having to wait those 10 minutes.

It turned out that the solution was even simpler than I thought. Now you can increase the tier of your Azure SQL Database before starting the ETL processing using Azure Data Factory, so that the processing is faster, and at the end of the processing, you go back to the original tier, paying more only during the time spent processing data. A smart way to have a much better performance paying much less 🙂

I hope you enjoyed this tip and until next time.