Hey guys!

In this article, I want to share a tip that most people who work with Analysis Services don't know about, even though they have always wanted something like it: monitoring the progress of cube processing from SQL Server.

Most BI professionals today only track how long it took to process the cube or database as a whole. They don't know how long each dimension, each fact, and each cube within a database took, and that is what I'm going to show you how to do in this post.

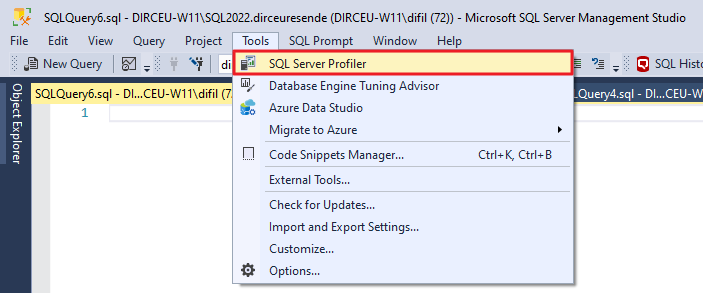

The first thing we're going to do is open the SQL Server Profiler to monitor cube processing:

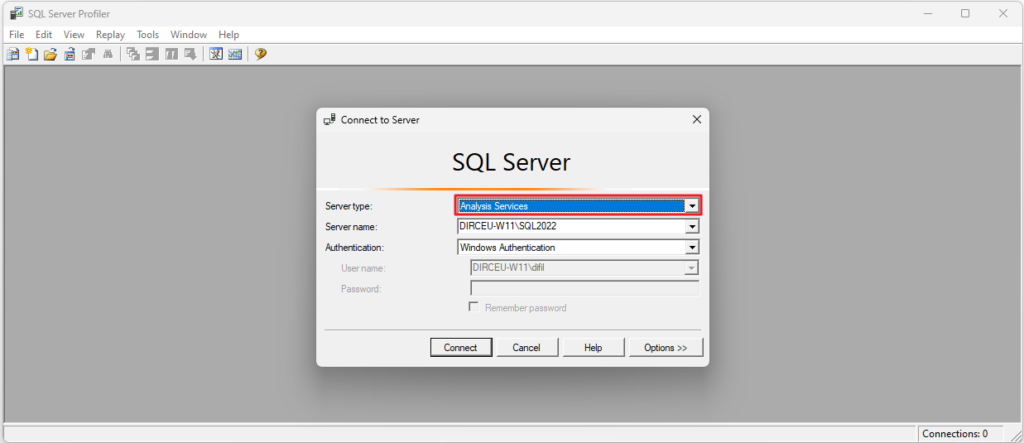

On the connection screen, remember to change the server type to “Analysis Services”, enter the name of the instance you are going to connect to, and configure the authentication details.

Remember that Analysis Services only accepts Windows authentication (on-premises Active Directory) or Azure Active Directory.

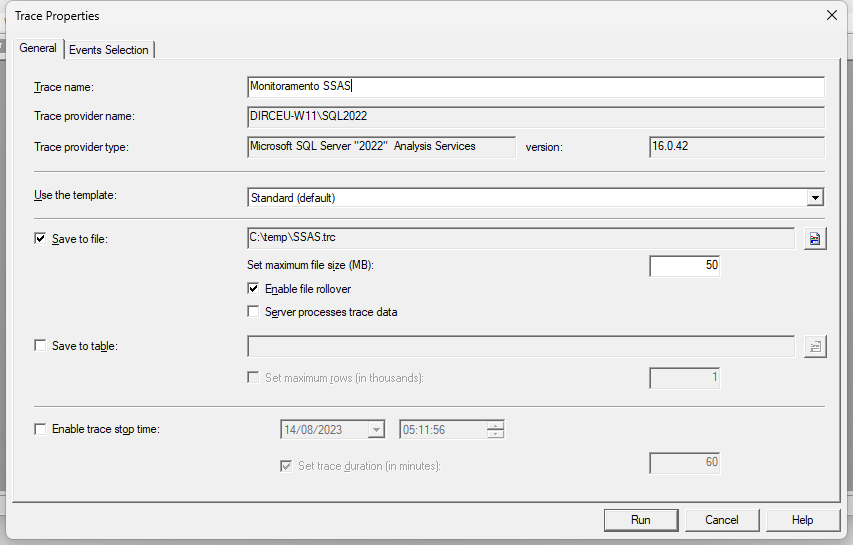

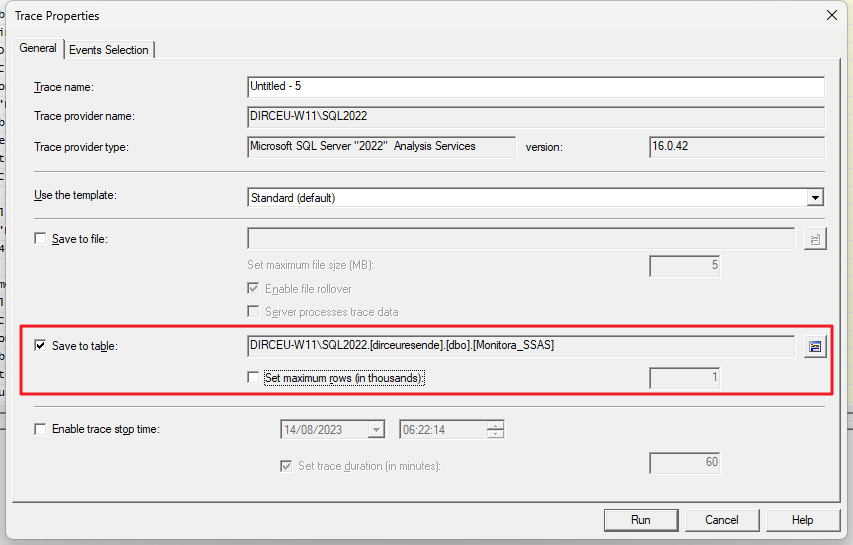

After connecting, you will be able to give the trace a name and choose where it will be stored (a database table or a file on disk).

I usually save traces to disk and then create a SQL Server Agent job that reads them, so that data isn't being written to the database all the time. For traces coming from Analysis Services, however, the ::fn_trace_gettable function, which is used to read the generated files, does not work:

File ‘C:\temp\SSAS.trc’ is not a recognizable trace file.

So I recommend configuring the trace to save its data directly to a database table from the start.

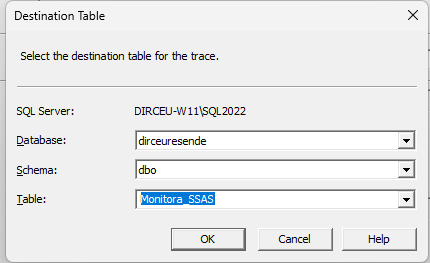

The destination table is now configured:

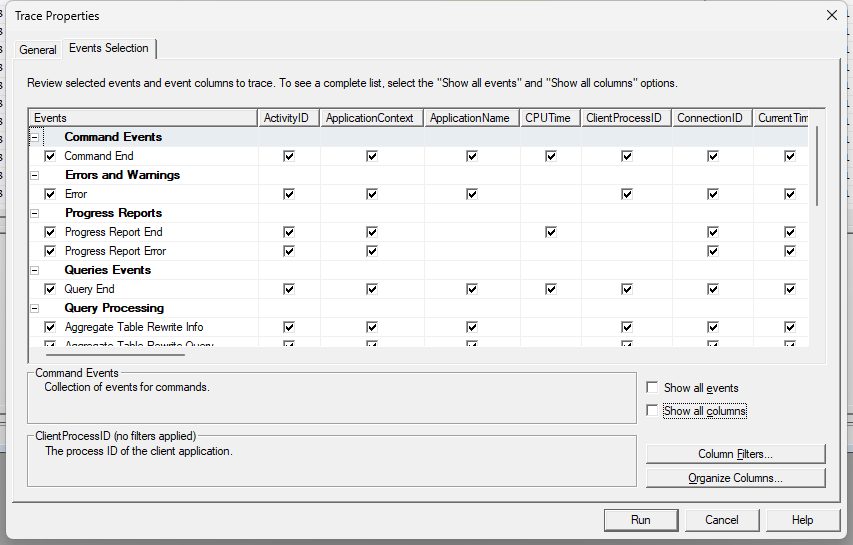

Now click on the “Event Selection” tab to select the events you will monitor.

Generally I mark only the “Error” and “End” events from the categories below:

- Command Events

- Errors and Warnings

- Progress Reports

- Queries Events

- Query Processing

You can change these captured events depending on the level of detail you want to monitor.
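Because the Error events are captured as well, failures can be listed straight from the trace table once processing finishes. This is a minimal sketch, assuming the trace is saved to a table with the hypothetical name `[dbo].[SSAS_Trace]` and that the columns below were selected. The event class codes come from the lookup script later in this post (8 = Progress Report Error, 17 = Error).

```sql
-- Sketch: list the errors captured during processing.
-- Assumptions: [dbo].[SSAS_Trace] is a hypothetical trace table name, and the
-- ObjectName, StartTime and TextData columns were selected in Profiler.
SELECT
    T.[RowNumber],
    T.[EventClass],      -- 8 = Progress Report Error, 17 = Error
    T.[EventSubclass],
    T.[ObjectName],
    T.[StartTime],
    T.[TextData]         -- error message text
FROM [dbo].[SSAS_Trace] AS T
WHERE T.[EventClass] IN (8, 17)
ORDER BY T.[RowNumber];
```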

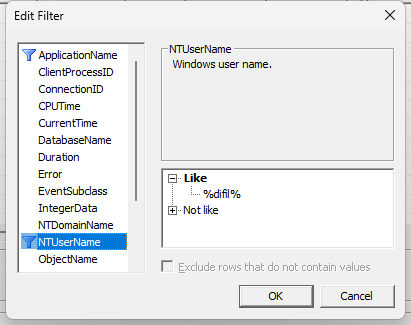

By clicking the “Column filters” button, you can apply filters such as user name, application name, host name, session ID, and so on.

In the example below, I apply a filter so that only events generated by my user are returned. This keeps events from other users who access the cube while it is being processed from skewing the results.
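If you forget to set this filter in Profiler, a similar restriction can be applied afterwards in SQL Server. The sketch below assumes the hypothetical trace table name `[dbo].[SSAS_Trace]` and that the NTUserName column was captured. The account name is a placeholder, so check how your trace records it (with or without the domain prefix). Filtering afterwards only hides the rows; unlike the Profiler filter, it does not reduce how much is written to the table.

```sql
-- Sketch: keep only the events generated by one account.
-- Assumptions: hypothetical table [dbo].[SSAS_Trace]; NTUserName was captured.
-- The CAST makes the comparison work even if Profiler created the column as ntext.
DECLARE @UserName NVARCHAR(256) = N'DOMAIN\your.user';  -- placeholder account

SELECT T.*
FROM [dbo].[SSAS_Trace] AS T
WHERE CAST(T.[NTUserName] AS NVARCHAR(256)) = @UserName
ORDER BY T.[RowNumber];
```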

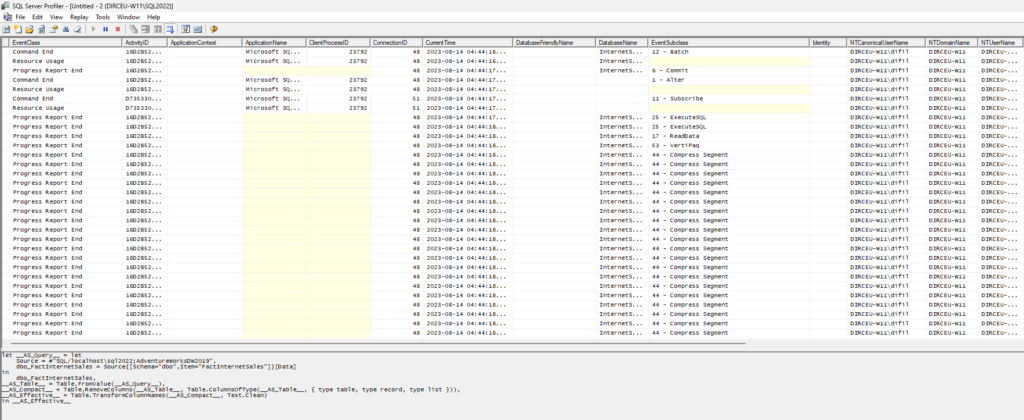

I click “Run” to start the trace, and then I start processing the cube.

You will see a screen like the one below, showing the monitoring result.

Although the data is easy to read on this screen, I prefer to query it in SQL Server, where I can group, sum, filter, and transform it as needed.

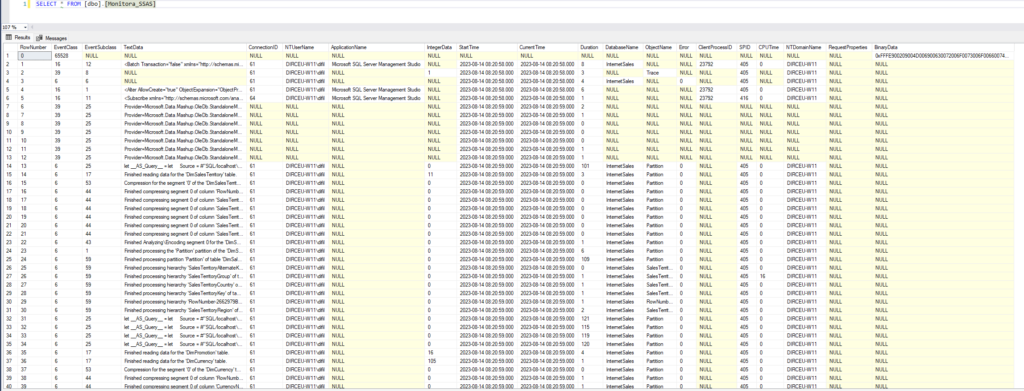
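As an example of the grouping this allows, here is a sketch that ranks objects by processing time using the Progress Report End events (EventClass 6) with the Process subclass (1), codes taken from the lookup script later in this post. It assumes the hypothetical table `[dbo].[SSAS_Trace]` with the ObjectName, ObjectPath and Duration columns captured; for Analysis Services events, Duration is reported in milliseconds. The CASTs are there because Profiler may create text columns as ntext, which cannot be grouped.

```sql
-- Sketch: processing time per object (dimension, attribute, partition, cube...).
-- Assumptions: hypothetical table [dbo].[SSAS_Trace]; ObjectName, ObjectPath
-- and Duration (milliseconds) were captured.
SELECT
    CAST(T.[ObjectPath] AS NVARCHAR(2000)) AS [ObjectPath],
    CAST(T.[ObjectName] AS NVARCHAR(512))  AS [ObjectName],
    COUNT(*)                               AS [Runs],          -- > 1 if processed more than once
    SUM(T.[Duration]) / 1000.0             AS [TotalSeconds]
FROM [dbo].[SSAS_Trace] AS T
WHERE T.[EventClass] = 6      -- Progress Report End
  AND T.[EventSubclass] = 1   -- Process
GROUP BY
    CAST(T.[ObjectPath] AS NVARCHAR(2000)),
    CAST(T.[ObjectName] AS NVARCHAR(512))
ORDER BY [TotalSeconds] DESC;
```

Keep in mind that the rows are likely to overlap (a cube's time includes the time of its partitions), so compare them with each other rather than adding them all up.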

Running a basic query against the table Profiler created already shows a good part of the data.
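A minimal version of such a query might look like this. It assumes the trace table is named `[dbo].[SSAS_Trace]` (a hypothetical name; use the one you chose in the trace properties) and that these columns were selected. RowNumber is the identity column Profiler normally adds when it saves a trace to a table.

```sql
-- Sketch: first look at the raw trace rows.
-- Assumption: [dbo].[SSAS_Trace] is a hypothetical trace table name.
SELECT TOP (1000)
    T.[RowNumber],
    T.[EventClass],      -- numeric event class code
    T.[EventSubclass],   -- numeric event subclass code
    T.[ObjectName],
    T.[StartTime],
    T.[EndTime],
    T.[Duration],        -- milliseconds for Analysis Services events
    T.[TextData]
FROM [dbo].[SSAS_Trace] AS T
ORDER BY T.[RowNumber];
```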

However, the EventClass and EventSubclass columns only contain numeric codes, so we need to add the descriptions of the event classes and subclasses to make the data easier to understand.

First, let's create two tables, ProfilerEventClass and ProfilerEventSubClass, to store the descriptions of the events that occur while a cube is processed, and populate them with the most common event types.
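Once both tables are populated, they can be joined to the trace table on the event class and subclass codes. This is a sketch, again assuming the hypothetical trace table `[dbo].[SSAS_Trace]`. The LEFT JOINs keep events whose codes are not in the lookup tables; those rows simply show a NULL name.

```sql
-- Sketch: trace rows with readable event names.
-- Assumption: [dbo].[SSAS_Trace] is a hypothetical trace table name.
SELECT
    T.[RowNumber],
    EC.[Name]              AS [EventClassName],
    ESC.[Name]             AS [EventSubClassName],
    T.[ObjectName],
    T.[StartTime],
    T.[EndTime],
    T.[Duration] / 1000.0  AS [DurationSeconds],  -- Duration is in milliseconds
    T.[TextData]
FROM [dbo].[SSAS_Trace] AS T
LEFT JOIN [dbo].[ProfilerEventClass] AS EC
    ON EC.[EventClassID] = T.[EventClass]
LEFT JOIN [dbo].[ProfilerEventSubClass] AS ESC
    ON  ESC.[EventClassID]    = T.[EventClass]
    AND ESC.[EventSubClassID] = T.[EventSubclass]
ORDER BY T.[RowNumber];
```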

Script for creating tables and inserting data:

    /* *************************************************************************
       ProfilerEventClass Table and Data
       ************************************************************************* */
    IF EXISTS (SELECT * FROM [sys].[objects] WHERE [object_id] = OBJECT_ID(N'[dbo].[ProfilerEventClass]') AND [type] IN (N'U'))
        DROP TABLE [dbo].[ProfilerEventClass];

    CREATE TABLE [dbo].[ProfilerEventClass]
    (
        [EventClassID] [INT] NOT NULL,
        [Name] [NVARCHAR](50) NULL,
        [Description] [NVARCHAR](500) NULL,
        CONSTRAINT [PK_ProfilerEventClass] PRIMARY KEY CLUSTERED ([EventClassID] ASC)
            WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON)
    );

    INSERT INTO [dbo].[ProfilerEventClass] ([EventClassID], [Name], [Description])
    VALUES
        (1, 'Audit Login', 'Collects all new connection events since the trace was started, such as when a client requests a connection to a server running an instance of SQL Server.'),
        (2, 'Audit Logout', 'Collects all new disconnect events since the trace was started, such as when a client issues a disconnect command.'),
        (4, 'Audit Server Starts And Stops', 'Records service shut down, start, and pause activities.'),
        (18, 'Audit Object Permission Event', 'Records object permission changes.'),
        (19, 'Audit Backup/Restore Event', 'Records server backup/restore.'),
        (5, 'Progress Report Begin', 'Progress report begin.'),
        (6, 'Progress Report End', 'Progress report end.'),
        (7, 'Progress Report Current', 'Progress report current.'),
        (8, 'Progress Report Error', 'Progress report error.'),
        (9, 'Query Begin', 'Query begin.'),
        (10, 'Query End', 'Query end.'),
        (15, 'Command Begin', 'Command begin.'),
        (16, 'Command End', 'Command end.'),
        (17, 'Error', 'Server error.'),
        (33, 'Server State Discover Begin', 'Start of Server State Discover.'),
        (34, 'Server State Discover Data', 'Contents of the Server State Discover Response.'),
        (35, 'Server State Discover End', 'End of Server State Discover.'),
        (36, 'Discover Begin', 'Start of Discover Request.'),
        (38, 'Discover End', 'End of Discover Request.'),
        (39, 'Notification', 'Notification event.'),
        (40, 'User Defined', 'User defined Event.'),
        (41, 'Existing Connection', 'Existing user connection.'),
        (42, 'Existing Session', 'Existing session.'),
        (43, 'Session Initialize', 'Session Initialize.'),
        (50, 'Deadlock', 'Metadata locks deadlock.'),
        (51, 'Lock timeout', 'Metadata lock timeout.'),
        (70, 'Query Cube Begin', 'Query cube begin.'),
        (71, 'Query Cube End', 'Query cube end.'),
        (72, 'Calculate Non Empty Begin', 'Calculate non empty begin.'),
        (73, 'Calculate Non Empty Current', 'Calculate non empty current.'),
        (74, 'Calculate Non Empty End', 'Calculate non empty end.'),
        (75, 'Serialize Results Begin', 'Serialize results begin.'),
        (76, 'Serialize Results Current', 'Serialize results current.'),
        (77, 'Serialize Results End', 'Serialize results end.'),
        (78, 'Execute MDX Script Begin', 'Execute MDX script begin.'),
        (79, 'Execute MDX Script Current', 'Execute MDX script current.'),
        (80, 'Execute MDX Script End', 'Execute MDX script end.'),
        (81, 'Query Dimension', 'Query dimension.'),
        (11, 'Query Subcube', 'Query subcube, for Usage Based Optimization.'),
        (12, 'Query Subcube Verbose', 'Query subcube with detailed information. This event may have a negative impact on performance when turned on.'),
        (60, 'Get Data From Aggregation', 'Answer query by getting data from aggregation. This event may have a negative impact on performance when turned on.'),
        (61, 'Get Data From Cache', 'Answer query by getting data from one of the caches. This event may have a negative impact on performance when turned on.');

    /* *************************************************************************
       ProfilerEventSubClass Table and Data
       ************************************************************************* */
    IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[ProfilerEventSubClass]') AND type IN (N'U'))
        DROP TABLE [dbo].[ProfilerEventSubClass];

    CREATE TABLE [dbo].[ProfilerEventSubClass]
    (
        [EventClassID] [INT] NOT NULL,
        [EventSubClassID] [INT] NOT NULL,
        [Name] [NVARCHAR](50) NULL,
        CONSTRAINT [PK_ProfilerEventSubClass] PRIMARY KEY CLUSTERED ([EventClassID] ASC, [EventSubClassID] ASC)
            WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON)
    );

    INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID], [Name])
    VALUES
        (4, 1, 'Instance Shutdown'),
        (4, 2, 'Instance Started'),
        (4, 3, 'Instance Paused'),
        (4, 4, 'Instance Continued'),
        (19, 1, 'Backup'),
        (19, 2, 'Restore'),
        (19, 3, 'Synchronize'),
        (5, 1, 'Process'),
        (5, 2, 'Merge'),
        (5, 3, 'Delete'),
        (5, 4, 'DeleteOldAggregations'),
        (5, 5, 'Rebuild'),
        (5, 6, 'Commit'),
        (5, 7, 'Rollback'),
        (5, 8, 'CreateIndexes'),
        (5, 9, 'CreateTable'),
        (5, 10, 'InsertInto'),
        (5, 11, 'Transaction'),
        (5, 12, 'Initialize'),
        (5, 13, 'Discretize'),
        (5, 14, 'Query'),
        (5, 15, 'CreateView'),
        (5, 16, 'WriteData'),
        (5, 17, 'ReadData'),
        (5, 18, 'GroupData'),
        (5, 19, 'GroupDataRecord'),
        (5, 20, 'BuildIndex'),
        (5, 21, 'Aggregate'),
        (5, 22, 'BuildDecode'),
        (5, 23, 'WriteDecode'),
        (5, 24, 'BuildDMDecode'),
        (5, 25, 'ExecuteSQL'),
        (5, 26, 'ExecuteModifiedSQL'),
        (5, 27, 'Connecting'),
        (5, 28, 'BuildAggsAndIndexes'),
        (5, 29, 'MergeAggsOnDisk'),
        (5, 30, 'BuildIndexForRigidAggs'),
        (5, 31, 'BuildIndexForFlexibleAggs'),
        (5, 32, 'WriteAggsAndIndexes'),
        (5, 33, 'WriteSegment'),
        (5, 34, 'DataMiningProgress'),
        (5, 35, 'ReadBufferFullReport'),
        (5, 36, 'ProactiveCacheConversion'),
        (5, 37, 'Backup'),
        (5, 38, 'Restore'),
        (5, 39, 'Synchronize'),
        (5, 40, 'Build Processing Schedule'),
        (6, 1, 'Process'),
        (6, 2, 'Merge'),
        (6, 3, 'Delete'),
        (6, 4, 'DeleteOldAggregations'),
        (6, 5, 'Rebuild'),
        (6, 6, 'Commit'),
        (6, 7, 'Rollback'),
        (6, 8, 'CreateIndexes'),
        (6, 9, 'CreateTable'),
        (6, 10, 'InsertInto'),
        (6, 11, 'Transaction'),
        (6, 12, 'Initialize'),
        (6, 13, 'Discretize'),
        (6, 14, 'Query'),
        (6, 15, 'CreateView'),
        (6, 16, 'WriteData'),
        (6, 17, 'ReadData'),
        (6, 18, 'GroupData'),
        (6, 19, 'GroupDataRecord'),
        (6, 20, 'BuildIndex'),
        (6, 21, 'Aggregate'),
        (6, 22, 'BuildDecode'),
        (6, 23, 'WriteDecode'),
        (6, 24, 'BuildDMDecode'),
        (6, 25, 'ExecuteSQL'),
        (6, 26, 'ExecuteModifiedSQL'),
        (6, 27, 'Connecting'),
        (6, 28, 'BuildAggsAndIndexes'),
        (6, 29, 'MergeAggsOnDisk'),
        (6, 30, 'BuildIndexForRigidAggs'),
        (6, 31, 'BuildIndexForFlexibleAggs'),
        (6, 32, 'WriteAggsAndIndexes'),
        (6, 33, 'WriteSegment'),
        (6, 34, 'DataMiningProgress'),
        (6, 35, 'ReadBufferFullReport'),
        (6, 36, 'ProactiveCacheConversion'),
        (6, 37, 'Backup'),
        (6, 38, 'Restore'),
        (6, 39, 'Synchronize'),
        (6, 40, 'Build Processing Schedule'),
        (7, 1, 'Process'),
        (7, 2, 'Merge'),
        (7, 3, 'Delete'),
        (7, 4, 'DeleteOldAggregations'),
        (7, 5, 'Rebuild'),
        (7, 6, 'Commit'),
        (7, 7, 'Rollback'),
        (7, 8, 'CreateIndexes'),
        (7, 9, 'CreateTable'),
        (7, 10, 'InsertInto'),
        (7, 11, 'Transaction'),
        (7, 12, 'Initialize'),
        (7, 13, 'Discretize'),
        (7, 14, 'Query'),
        (7, 15, 'CreateView'),
        (7, 16, 'WriteData'),
        (7, 17, 'ReadData'),
        (7, 18, 'GroupData'),
        (7, 19, 'GroupDataRecord'),
        (7, 20, 'BuildIndex'),
        (7, 21, 'Aggregate'),
        (7, 22, 'BuildDecode'),
        (7, 23, 'WriteDecode'),
        (7, 24, 'BuildDMDecode'),
        (7, 25, 'ExecuteSQL'),
        (7, 26, 'ExecuteModifiedSQL'),
        (7, 27, 'Connecting'),
        (7, 28, 'BuildAggsAndIndexes'),
        (7, 29, 'MergeAggsOnDisk'),
        (7, 30, 'BuildIndexForRigidAggs'),
        (7, 31, 'BuildIndexForFlexibleAggs'),
        (7, 32, 'WriteAggsAndIndexes'),
        (7, 33, 'WriteSegment'),
        (7, 34, 'DataMiningProgress'),
        (7, 35, 'ReadBufferFullReport'),
        (7, 36, 'ProactiveCacheConversion'),
        (7, 37, 'Backup'),
        (7, 38, 'Restore'),
        (7, 39, 'Synchronize'),
        (7, 40, 'Build Processing Schedule'),
        (8, 1, 'Process'),
        (8, 2, 'Merge'),
        (8, 3, 'Delete'),
        (8, 4, 'DeleteOldAggregations'),
        (8, 5, 'Rebuild'),
        (8, 6, 'Commit'),
        (8, 7, 'Rollback'),
        (8, 8, 'CreateIndexes'),
        (8, 9, 'CreateTable'),
        (8, 10, 'InsertInto'),
        (8, 11, 'Transaction'),
        (8, 12, 'Initialize'),
        (8, 13, 'Discretize'),
        (8, 14, 'Query'),
        (8, 15, 'CreateView'),
        (8, 16, 'WriteData'),
        (8, 17, 'ReadData'),
        (8, 18, 'GroupData'),
        (8, 19, 'GroupDataRecord'),
        (8, 20, 'BuildIndex'),
        (8, 21, 'Aggregate'),
        (8, 22, 'BuildDecode'),
        (8, 23, 'WriteDecode'),
        (8, 24, 'BuildDMDecode'),
        (8, 25, 'ExecuteSQL'),
        (8, 26, 'ExecuteModifiedSQL'),
        (8, 27, 'Connecting'),
        (8, 28, 'BuildAggsAndIndexes'),
        (8, 29, 'MergeAggsOnDisk'),
        (8, 30, 'BuildIndexForRigidAggs'),
        (8, 31, 'BuildIndexForFlexibleAggs'),
        (8, 32, 'WriteAggsAndIndexes'),
        (8, 33, 'WriteSegment'),
        (8, 34, 'DataMiningProgress'),
        (8, 35, 'ReadBufferFullReport'),
        (8, 36, 'ProactiveCacheConversion'),
        (8, 37, 'Backup'),
        (8, 38, 'Restore'),
        (8, 39, 'Synchronize'),
        (8, 40, 'Build Processing Schedule'),
        (9, 0, 'MDXQuery'),
        (9, 1, 'DMXQuery'),
        (9, 2, 'SQLQuery'),
        (10, 0, 'MDXQuery'),
        (10, 1, 'DMXQuery'),
        (10, 2, 'SQLQuery'),
        (15, 0, 'Create'),
        (15, 1, 'Alter'),
        (15, 2, 'Delete'),
        (15, 3, 'Process'),
        (15, 4, 'DesignAggregations'),
        (15, 5, 'WBInsert'),
        (15, 6, 'WBUpdate'),
        (15, 7, 'WBDelete'),
        (15, 8, 'Backup'),
        (15, 9, 'Restore'),
        (15, 10, 'MergePartitions'),
        (15, 11, 'Subscribe'),
        (15, 12, 'Batch'),
        (15, 13, 'BeginTransaction'),
        (15, 14, 'CommitTransaction'),
        (15, 15, 'RollbackTransaction'),
        (15, 16, 'GetTransactionState'),
        (15, 17, 'Cancel'),
        (15, 18, 'Synchronize'),
        (15, 19, 'Import80MiningModels'),
        (15, 10000, 'Other'),
        (16, 0, 'Create'),
        (16, 1, 'Alter'),
        (16, 2, 'Delete'),
        (16, 3, 'Process'),
        (16, 4, 'DesignAggregations'),
        (16, 5, 'WBInsert'),
        (16, 6, 'WBUpdate'),
        (16, 7, 'WBDelete'),
        (16, 8, 'Backup'),
        (16, 9, 'Restore'),
        (16, 10, 'MergePartitions'),
        (16, 11, 'Subscribe'),
        (16, 12, 'Batch'),
        (16, 13, 'BeginTransaction'),
        (16, 14, 'CommitTransaction'),
        (16, 15, 'RollbackTransaction'),
        (16, 16, 'GetTransactionState'),
        (16, 17, 'Cancel'),
        (16, 18, 'Synchronize'),
        (16, 19, 'Import80MiningModels'),
        (16, 10000, 'Other'),
        (33, 1, 'DISCOVER_CONNECTIONS');

    INSERT INTO
[dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 2, 'DISCOVER_SESSIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 3, 'DISCOVER_TRANSACTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 6, 'DISCOVER_DB_CONNECTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 7, 'DISCOVER_JOBS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 8, 'DISCOVER_LOCKS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 12, 'DISCOVER_PERFORMANCE_COUNTERS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 13, 'DISCOVER_MEMORYUSAGE') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 14, 'DISCOVER_JOB_PROGRESS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (33, 15, 'DISCOVER_MEMORYGRANT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 1, 'DISCOVER_CONNECTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 2, 'DISCOVER_SESSIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 3, 'DISCOVER_TRANSACTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 6, 'DISCOVER_DB_CONNECTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 7, 'DISCOVER_JOBS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 8, 'DISCOVER_LOCKS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 12, 'DISCOVER_PERFORMANCE_COUNTERS') INSERT INTO 
[dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 13, 'DISCOVER_MEMORYUSAGE') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 14, 'DISCOVER_JOB_PROGRESS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (34, 15, 'DISCOVER_MEMORYGRANT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 1, 'DISCOVER_CONNECTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 2, 'DISCOVER_SESSIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 3, 'DISCOVER_TRANSACTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 6, 'DISCOVER_DB_CONNECTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 7, 'DISCOVER_JOBS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 8, 'DISCOVER_LOCKS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 12, 'DISCOVER_PERFORMANCE_COUNTERS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 13, 'DISCOVER_MEMORYUSAGE') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 14, 'DISCOVER_JOB_PROGRESS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (35, 15, 'DISCOVER_MEMORYGRANT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 0, 'DBSCHEMA_CATALOGS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 1, 'DBSCHEMA_TABLES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 2, 'DBSCHEMA_COLUMNS') INSERT INTO 
[dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 3, 'DBSCHEMA_PROVIDER_TYPES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 4, 'MDSCHEMA_CUBES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 5, 'MDSCHEMA_DIMENSIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 6, 'MDSCHEMA_HIERARCHIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 7, 'MDSCHEMA_LEVELS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 8, 'MDSCHEMA_MEASURES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 9, 'MDSCHEMA_PROPERTIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 10, 'MDSCHEMA_MEMBERS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 11, 'MDSCHEMA_FUNCTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 12, 'MDSCHEMA_ACTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 13, 'MDSCHEMA_SETS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 14, 'DISCOVER_INSTANCES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 15, 'MDSCHEMA_KPIS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 16, 'MDSCHEMA_MEASUREGROUPS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 17, 'MDSCHEMA_COMMANDS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 18, 'DMSCHEMA_MINING_SERVICES') INSERT INTO [dbo].[ProfilerEventSubClass] 
([EventClassID], [EventSubClassID],[Name]) VALUES (36, 19, 'DMSCHEMA_MINING_SERVICE_PARAMETERS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 20, 'DMSCHEMA_MINING_FUNCTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 21, 'DMSCHEMA_MINING_MODEL_CONTENT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 22, 'DMSCHEMA_MINING_MODEL_XML') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 23, 'DMSCHEMA_MINING_MODELS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 24, 'DMSCHEMA_MINING_COLUMNS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 25, 'DISCOVER_DATASOURCES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 26, 'DISCOVER_PROPERTIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 27, 'DISCOVER_SCHEMA_ROWSETS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 28, 'DISCOVER_ENUMERATORS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 29, 'DISCOVER_KEYWORDS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 30, 'DISCOVER_LITERALS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 31, 'DISCOVER_XML_METADATA') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 32, 'DISCOVER_TRACES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 33, 'DISCOVER_TRACE_DEFINITION_PROVIDERINFO') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 34, 
'DISCOVER_TRACE_COLUMNS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 35, 'DISCOVER_TRACE_EVENT_CATEGORIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 36, 'DMSCHEMA_MINING_STRUCTURES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 37, 'DMSCHEMA_MINING_STRUCTURE_COLUMNS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 38, 'DISCOVER_MASTER_KEY') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 39, 'MDSCHEMA_INPUT_DATASOURCES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 40, 'DISCOVER_LOCATIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 41, 'DISCOVER_PARTITION_DIMENSION_STAT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 42, 'DISCOVER_PARTITION_STAT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (36, 43, 'DISCOVER_DIMENSION_STAT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 0, 'DBSCHEMA_CATALOGS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 1, 'DBSCHEMA_TABLES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 2, 'DBSCHEMA_COLUMNS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 3, 'DBSCHEMA_PROVIDER_TYPES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 4, 'MDSCHEMA_CUBES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 5, 'MDSCHEMA_DIMENSIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], 
[EventSubClassID],[Name]) VALUES (38, 6, 'MDSCHEMA_HIERARCHIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 7, 'MDSCHEMA_LEVELS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 8, 'MDSCHEMA_MEASURES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 9, 'MDSCHEMA_PROPERTIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 10, 'MDSCHEMA_MEMBERS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 11, 'MDSCHEMA_FUNCTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 12, 'MDSCHEMA_ACTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 13, 'MDSCHEMA_SETS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 14, 'DISCOVER_INSTANCES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 15, 'MDSCHEMA_KPIS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 16, 'MDSCHEMA_MEASUREGROUPS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 17, 'MDSCHEMA_COMMANDS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 18, 'DMSCHEMA_MINING_SERVICES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 19, 'DMSCHEMA_MINING_SERVICE_PARAMETERS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 20, 'DMSCHEMA_MINING_FUNCTIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 21, 'DMSCHEMA_MINING_MODEL_CONTENT') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], 
[EventSubClassID],[Name]) VALUES (38, 22, 'DMSCHEMA_MINING_MODEL_XML') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 23, 'DMSCHEMA_MINING_MODELS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 24, 'DMSCHEMA_MINING_COLUMNS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 25, 'DISCOVER_DATASOURCES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 26, 'DISCOVER_PROPERTIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 27, 'DISCOVER_SCHEMA_ROWSETS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 28, 'DISCOVER_ENUMERATORS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 29, 'DISCOVER_KEYWORDS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 30, 'DISCOVER_LITERALS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 31, 'DISCOVER_XML_METADATA') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 32, 'DISCOVER_TRACES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 33, 'DISCOVER_TRACE_DEFINITION_PROVIDERINFO') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 34, 'DISCOVER_TRACE_COLUMNS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 35, 'DISCOVER_TRACE_EVENT_CATEGORIES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 36, 'DMSCHEMA_MINING_STRUCTURES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 37, 'DMSCHEMA_MINING_STRUCTURE_COLUMNS') INSERT 
INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 38, 'DISCOVER_MASTER_KEY') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 39, 'MDSCHEMA_INPUT_DATASOURCES') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (38, 40, 'DISCOVER_LOCATIONS') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 0, 'Proactive Caching Begin') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 1, 'Proactive Caching End') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 2, 'Flight Recorder Started') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 3, 'Flight Recorder Stopped') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 4, 'Configuration Properties Updated') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 5, 'SQL Trace') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 6, 'Object Created') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 7, 'Object Deleted') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 8, 'Object Altered') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 9, 'Proactive Caching Polling Begin') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 10, 'Proactive Caching Polling End') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 11, 'Flight Recorder Snapshot Begin') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 12, 'Flight 
Recorder Snapshot End') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 13, 'Proactive Caching: notifiable object updated') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 14, 'Lazy Processing: start processing') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (39, 15, 'Lazy Processing: processing complete') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (73, 1, 'Get Data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (73, 2, 'Process Calculated Members') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (73, 3, 'Post Order') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (76, 1, 'Serialize Axes') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (76, 2, 'Serialize Cells') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (76, 3, 'Serialize SQL Rowset') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (76, 4, 'Serialize Flattened Rowset') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (81, 1, 'Cache data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (81, 2, 'Non-cache data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (11, 1, 'Cache data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (11, 2, 'Non-cache data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (11, 3, 'Internal data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (11, 4, 'SQL data') INSERT 
INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (11, 11, 'Measure Group Structural Change') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (11, 12, 'Measure Group Deletion') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (12, 21, 'Cache data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (12, 22, 'Non-cache data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (12, 23, 'Internal data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (12, 24, 'SQL data') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (61, 1, 'Get data from measure group cache') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (61, 2, 'Get data from flat cache') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (61, 3, 'Get data from calculation cache') INSERT INTO [dbo].[ProfilerEventSubClass] ([EventClassID], [EventSubClassID],[Name]) VALUES (61, 4, 'Get data from persisted cache') |

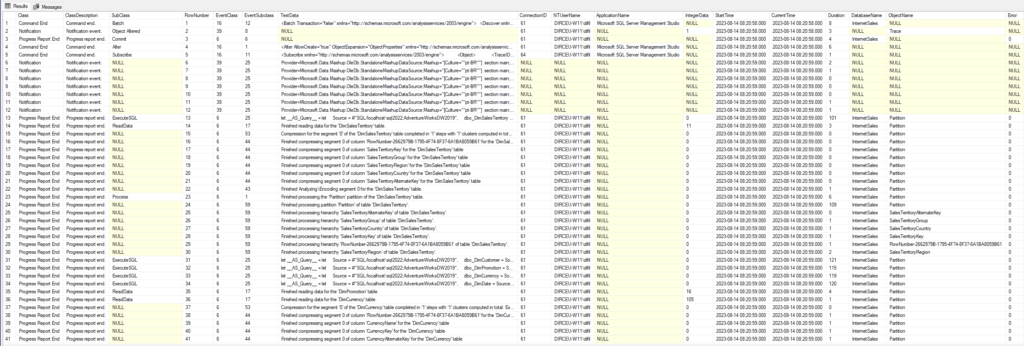

Now I can interpret the results much more easily by joining my monitoring table with the two lookup tables created above ([dbo].[ProfilerEventClass] and [dbo].[ProfilerEventSubClass]), as in the example below:

```sql
SELECT
    B.[Name] AS [Class],
    B.[Description] AS [ClassDescription],
    C.[Name] AS [SubClass],
    [A].[RowNumber],
    [A].[EventClass],
    [A].[EventSubclass],
    [A].[TextData],
    [A].[ConnectionID],
    [A].[NTUserName],
    [A].[ApplicationName],
    [A].[IntegerData],
    [A].[StartTime],
    [A].[CurrentTime],
    [A].[Duration],
    [A].[DatabaseName],
    [A].[ObjectName],
    [A].[Error],
    [A].[ClientProcessID],
    [A].[SPID],
    [A].[CPUTime],
    [A].[NTDomainName]
FROM
    [dbo].[Monitora_SSAS] A
    JOIN [dbo].[ProfilerEventClass] B ON A.[EventClass] = B.[EventClassID]
    LEFT JOIN [dbo].[ProfilerEventSubClass] C ON A.[EventSubclass] = C.[EventSubClassID] AND [B].[EventClassID] = [C].[EventClassID]
ORDER BY
    [A].[RowNumber]
```

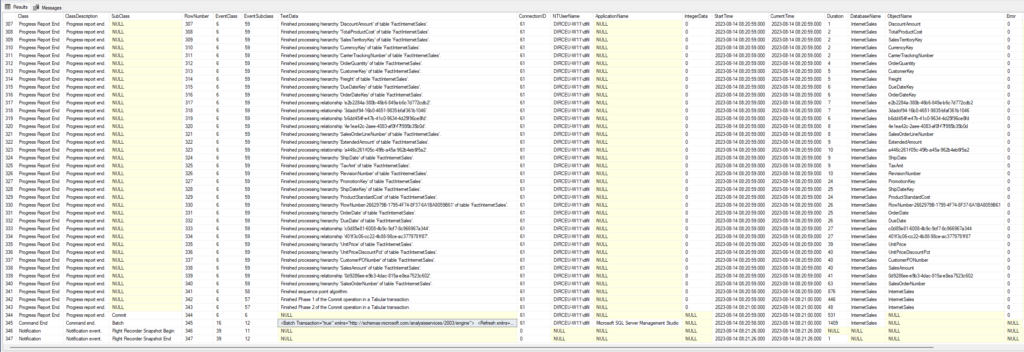

And it returns a table like this one:

Some important points to highlight about the returned data:

- The “Duration” and “CPUTime” columns are measured in milliseconds.

- The “IntegerData” column contains the number of rows processed (it is only filled in for some events, such as ReadData).

- The “SPID” column is the session ID of the user running the operation. Use it to isolate a specific execution when several operations are running on the server at the same time.

- I did not capture the “Begin” or “Current” events, only the “End” events, because the “Duration” and “CPUTime” columns are only populated at that step.

- In “End” events, the “StartTime” column is when the operation started, and the “CurrentTime” column is when it ended.
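Putting these points together, the trace table can answer the question this post started with: how long each dimension, partition and cube took to process. The batch below is a minimal sketch, not a definitive report. It assumes the trace table is the same [dbo].[Monitora_SSAS] used above, and that event class 6 is Progress Report End with subclass 1 = Process and 17 = ReadData; those IDs follow the SSAS trace event definitions, but confirm them against your own ProfilerEventClass and ProfilerEventSubClass tables. The @SPID variable is a hypothetical filter added for illustration.

```sql
-- Sketch: per-object processing times from the trace saved in [dbo].[Monitora_SSAS].
-- Assumed IDs: EventClass 6 = Progress Report End; EventSubclass 1 = Process, 17 = ReadData.
DECLARE @SPID INT = NULL; -- hypothetical filter: set to a session ID to isolate one run

-- 1) How long each object (database, cube, dimension, partition...) took to process
SELECT
    A.[SPID],
    A.[DatabaseName],
    A.[ObjectName],
    A.[StartTime],                               -- when the operation started
    A.[CurrentTime] AS [EndTime],                -- on End events, when it finished
    A.[Duration] / 1000.0 AS [DurationSeconds],  -- Duration is stored in milliseconds
    A.[CPUTime] / 1000.0 AS [CPUSeconds]         -- CPUTime is stored in milliseconds
FROM [dbo].[Monitora_SSAS] A
WHERE A.[EventClass] = 6      -- Progress Report End (assumed ID)
  AND A.[EventSubclass] = 1   -- Process (assumed ID)
  AND (@SPID IS NULL OR A.[SPID] = @SPID)
ORDER BY A.[Duration] DESC;

-- 2) Rows read per object and an approximate throughput
SELECT
    A.[SPID],
    A.[ObjectName],
    A.[IntegerData] AS [RowsRead],               -- row count, only filled for some events
    A.[Duration] / 1000.0 AS [DurationSeconds],
    A.[IntegerData] * 1000.0 / NULLIF(A.[Duration], 0) AS [RowsPerSecond] -- NULL when Duration is 0
FROM [dbo].[Monitora_SSAS] A
WHERE A.[EventClass] = 6      -- Progress Report End (assumed ID)
  AND A.[EventSubclass] = 17  -- ReadData (assumed ID)
  AND (@SPID IS NULL OR A.[SPID] = @SPID)
ORDER BY A.[Duration] DESC;
```

With @SPID left as NULL, both queries cover every run stored in the table; setting it to the SPID of a single processing job narrows the output to that run.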

Keep an eye on how much disk space these logs consume, so they don't turn into a problem later on.
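One way to keep the monitoring table from growing indefinitely is a periodic purge, for example as an extra step in the same SQL Server Agent job. This is a minimal sketch under stated assumptions: the 30-day retention window and the batch size are hypothetical values to tune, and the cutoff relies on the StartTime column of the trace table used above.

```sql
-- Sketch: purge old rows from the trace table in small batches.
-- @RetentionDays and @BatchSize are hypothetical values; adjust them to your environment.
DECLARE @RetentionDays INT = 30;
DECLARE @BatchSize INT = 50000;
DECLARE @Cutoff DATETIME = DATEADD(DAY, -@RetentionDays, GETDATE());

WHILE 1 = 1
BEGIN
    -- Deleting in batches keeps each transaction, and the log growth, small.
    -- Rows with a NULL StartTime do not match the filter and are kept.
    DELETE TOP (@BatchSize)
    FROM [dbo].[Monitora_SSAS]
    WHERE [StartTime] < @Cutoff;

    IF @@ROWCOUNT < @BatchSize BREAK; -- the last (partial) batch has been deleted
END;
```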

And that's it, folks!

I hope you enjoyed this tip and that it helps you monitor your cube processing.